OpenCV 3.3 comes with better support for deep learning. The DNN (Deep Neural Network) module has been moved from the opencv_contrib repository to the main repository and has therefore become a standard part of OpenCV. The current release supports the backends and model I/O of Caffe, TensorFlow, Torch and PyTorch. You can pick any of them to build deep-learning-powered computer vision applications with much less work.

This post tries to get you hands-on with deep learning in OpenCV, if you are a beginner like me.

1) Remove libgtk-3-dev and use libgtk2.0-dev instead, because libgtk-3-dev depends on libmirprotobuf-dev. Since we need to recompile protobuf, any older protobuf version that is already installed may cause conflicts.

sudo apt-get remove libgtk-3-dev

sudo apt-get install libgtk2.0-dev

2) Remove libprotobuf-dev, libmirprotobuf-dev

sudo apt-get remove libprotobuf-dev libmirprotobuf-dev

sudo rm -rf /usr/include/google/protobuf

sudo rm -rf /usr/lib/libprotobuf*

sudo rm -rf /usr/local/include/google/protobuf

sudo rm -rf /usr/local/lib/libprotobuf*
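Before rebuilding, it may help to confirm that nothing was left behind. The following is a minimal sketch that lists any remaining libprotobuf* files in the two library directories cleaned above. The function name find_protobuf_leftovers is made up for this illustration, and your distribution may keep libraries in other directories as well (for example an architecture subdirectory such as /usr/lib/x86_64-linux-gnu), which you can pass in explicitly.

```python
import glob
import os

# Library directories cleaned by the rm commands in this step.
DEFAULT_DIRS = ["/usr/lib", "/usr/local/lib"]


def find_protobuf_leftovers(lib_dirs=DEFAULT_DIRS):
    """Return the sorted paths of libprotobuf* files still present in lib_dirs."""
    leftovers = []
    for lib_dir in lib_dirs:
        # glob simply returns nothing for a directory that does not exist
        leftovers.extend(glob.glob(os.path.join(lib_dir, "libprotobuf*")))
    return sorted(leftovers)


if __name__ == "__main__":
    found = find_protobuf_leftovers()
    if found:
        print("Leftover protobuf libraries:")
        for path in found:
            print("  " + path)
    else:
        print("No libprotobuf* files found")
```

An empty result means the directories checked are clean; it does not prove that no other copy of protobuf exists elsewhere on the system.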

3) Install protobuf build dependencies

sudo apt-get install autoconf automake libtool curl make g++ unzip

4) Download protobuf sources and generate configuration

git clone https://github.com/google/protobuf.git

cd protobuf

./autogen.sh

5) Configure protobuf build profile

gedit configure

Make some changes from line 2659 to 2664 (line numbers depend on your source version):

Original:

if test "x${ac_cv_env_CFLAGS_set}" = "x"; then :

CFLAGS=""

fi

if test "x${ac_cv_env_CXXFLAGS_set}" = "x"; then :

CXXFLAGS=""

fi

Modified:

if test "x${ac_cv_env_CFLAGS_set}" = "x"; then :

CFLAGS="-fPIC"

fi

if test "x${ac_cv_env_CXXFLAGS_set}" = "x"; then :

CXXFLAGS="-fPIC"

fi
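Because the line numbers differ between protobuf versions, the same change can be applied by pattern instead of by hand. This is only a sketch of that idea: the helper add_fpic is a hypothetical name, and it assumes the default assignments appear exactly as CFLAGS="" and CXXFLAGS="" on their own lines, as in the excerpt above.

```python
import re

# Matches a line that only assigns an empty default, e.g.   CFLAGS=""
_EMPTY_FLAGS = re.compile(r'^([ \t]*)(CFLAGS|CXXFLAGS)=""[ \t]*$', re.MULTILINE)


def add_fpic(configure_text):
    """Return configure_text with empty CFLAGS/CXXFLAGS defaults set to -fPIC."""
    return _EMPTY_FLAGS.sub(r'\1\2="-fPIC"', configure_text)


if __name__ == "__main__":
    sample = (
        'if test "x${ac_cv_env_CFLAGS_set}" = "x"; then :\n'
        '  CFLAGS=""\n'
        'fi\n'
    )
    print(add_fpic(sample))
```

To use it on the real file, read configure into a string, pass it through add_fpic, and write the result back after keeping a backup copy. Assignments that already carry flags are left untouched.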

6) Make & Install

make -j`nproc`

sudo make install

7) Test

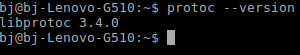

a) Check protobuf compiler version:

protoc --version

You should get this:

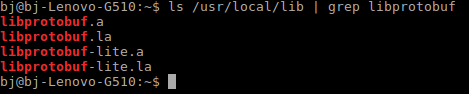
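The version check in a) can also be scripted, which is handy if you want a build script to stop early when protoc is missing. The sketch below assumes Python 3 and the usual "libprotoc X.Y.Z" output format; the function names are chosen for this example only.

```python
import re
import shutil
import subprocess


def parse_protoc_version(output):
    """Extract (major, minor, patch) from text such as 'libprotoc 3.4.0'."""
    match = re.search(r"libprotoc\s+(\d+)\.(\d+)(?:\.(\d+))?", output)
    if match is None:
        return None
    major, minor, patch = match.groups()
    return (int(major), int(minor), int(patch or 0))


def installed_protoc_version():
    """Run `protoc --version` if protoc is on PATH, otherwise return None."""
    if shutil.which("protoc") is None:
        return None
    result = subprocess.run(["protoc", "--version"],
                            stdout=subprocess.PIPE, universal_newlines=True)
    return parse_protoc_version(result.stdout)


if __name__ == "__main__":
    print("protoc version:", installed_protoc_version())
```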

b) Check protobuf static lib installation:

ls /usr/local/lib | grep libprotobuf

You should get this:

1) Disable OpenCV and check your own python include path (with numpy)

USE_OPENCV := 0

PYTHON_INCLUDE := /usr/include/python2.7 \

/usr/local/lib/python2.7/dist-packages/numpy/core/include

2) For customizing, follow the official installation guide: http://caffe.berkeleyvision.org/installation.html

3) If errors occur during the installation, refer to this page: http://blog.csdn.net/hongye000000/article/details/51043913
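For the include paths in step 1, you do not have to guess where Python and numpy keep their headers. The sketch below asks the interpreter itself; run it with the same Python that Caffe will use (Python 2.7 in this post). The function name python_include_dirs is an illustrative choice, and the numpy path is only reported when numpy is importable.

```python
import sysconfig


def python_include_dirs():
    """Return the Python header directory, plus numpy's if numpy is installed."""
    dirs = [sysconfig.get_paths()["include"]]
    try:
        import numpy
        dirs.append(numpy.get_include())
    except ImportError:
        # numpy is missing for this interpreter; only the Python headers are listed
        pass
    return dirs


if __name__ == "__main__":
    # Print the paths in a form that can be pasted into the PYTHON_INCLUDE setting
    print("PYTHON_INCLUDE := " + " \\\n    ".join(python_include_dirs()))
```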

1) Download sources:

2) For more detailed information, please refer to: http://www.linuxfromscratch.org/blfs/view/svn/general/opencv.html

3) In opencv-3.3.0/modules/python/python2/CMakeLists.txt, add one line:

if(NOT PYTHON2_INCLUDE_PATH OR NOT PYTHON2_NUMPY_INCLUDE_DIRS)

ocv_module_disable(python2)

endif()

set(the_description "The python2 bindings")

set(MODULE_NAME python2)

# Buildbot requires Python 2 to be in root lib dir

set(MODULE_INSTALL_SUBDIR "")

set(PYTHON PYTHON2)

include(../common.cmake)

# Line added. Don't copy and paste this directly; use your own Caffe path

include_directories(/home/bj/caffe/distribute/include)

unset(MODULE_NAME)

unset(MODULE_INSTALL_SUBDIR)

4) CMake options

cmake -DCMAKE_BUILD_TYPE=Release \
  -DOPENCV_EXTRA_MODULES_PATH=<opencv_contrib/modules> \
  -DCaffe_INCLUDE_DIR=~/caffe/distribute/include \
  -DCaffe_LIB_DIR=~/caffe/distribute/lib \
  -DENABLE_PRECOMPILED_HEADERS=OFF -DBUILD_PERF_TESTS=OFF \
  -DBUILD_TESTS=OFF -DENABLE_CXX11=ON -DBUILD_opencv_python2=ON

5) Make & Install

First, clean old opencv header files and libs:

sudo apt-get remove libopencv-dev

sudo rm /usr/local/include/opencv* -rf

sudo rm /usr/local/lib/libopencv*

In your cmake build directory:

make -j4

sudo make install

a) For Python user:

# For details, please refer to:
# http://www.pyimagesearch.com/2017/08/21/deep-learning-with-opencv/

import numpy as np
import argparse as ap
import time
import cv2

# parse command line arguments
arp = ap.ArgumentParser()
arp.add_argument("-i", "--image", required=False,
                 help="path to input image")
arp.add_argument("-p", "--prototxt", required=True,
                 help="path to Caffe 'deploy' prototxt file")
arp.add_argument("-m", "--model", required=True,
                 help="path to Caffe pretrained model")
arp.add_argument("-l", "--labels", required=True,
                 help="path to ImageNet labels (i.e., syn-sets)")
args = vars(arp.parse_args())

# use live demo (camera 0) if image is not given
cap = cv2.VideoCapture()
if args["image"] is None:
    cap.open(0)
else:
    cap.open(args["image"])
if not cap.isOpened():
    print("ERROR: Fail to capture")
    exit(0)

# set opencv video capture properties
cap.set(cv2.CAP_PROP_FRAME_WIDTH, 224)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 224)

# load labels: keep the first name after the synset id on each row
rows = open(args["labels"]).read().strip().split("\n")
classes = [r[r.find(" ") + 1:].split(",")[0] for r in rows]

# load model
print("[INFO] loading model...")
net = cv2.dnn.readNetFromCaffe(args["prototxt"], args["model"])

# loop over frames until no more can be read
while True:
    # read new frame
    ret, image = cap.read()
    if image is None:
        break

    # create blob from image
    blob = cv2.dnn.blobFromImage(image, 1, (224, 224), (104, 117, 123))

    # assign net input
    net.setInput(blob)

    # run the forward pass and measure how long it takes
    start = time.time()
    preds = net.forward()
    end = time.time()

    # show classification time cost
    print("[INFO] classification took {:.5} seconds".format(end - start))

    # find the top 3 scored classes
    idxs = np.argsort(preds[0])[::-1][:3]
    for (i, idx) in enumerate(idxs):
        # mark the top one on the image
        if i == 0:
            text = "Label: {}, {:.2f}".format(classes[idx], preds[0][idx])
            cv2.putText(image, text, (5, 25), cv2.FONT_HERSHEY_SIMPLEX,
                        0.7, (0, 0, 255), 2)
        # for each class in top 3, print the info
        print("[INFO] {}. label: {}, probability: {:.5}".format(i + 1,
              classes[idx], preds[0][idx]))

    # show image
    cv2.imshow("cvdnn_py", image)
    cv2.waitKey(1)

# before exiting
cv2.waitKey(0)
cap.release()
cv2.destroyAllWindows()

# end of file
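The label parsing and top-3 selection in the script above can be tried without a model or a camera. The sketch below reproduces both steps in plain Python; parse_label and top_k are names introduced here for illustration, the sample rows follow the "id name, synonyms" layout that the script assumes, and the scores are made up.

```python
def parse_label(row):
    """Return the first name after the synset id, e.g. 'tench'."""
    return row[row.find(" ") + 1:].split(",")[0]


def top_k(scores, k=3):
    """Return the indices of the k highest scores, best first."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:k]


if __name__ == "__main__":
    # Sample rows in the assumed label-file layout; scores are invented
    rows = [
        "n01440764 tench, Tinca tinca",
        "n01443537 goldfish, Carassius auratus",
        "n01484850 great white shark, white shark",
    ]
    scores = [0.1, 0.7, 0.2]
    classes = [parse_label(r) for r in rows]
    for rank, idx in enumerate(top_k(scores, k=2)):
        print("{}. {}: {}".format(rank + 1, classes[idx], scores[idx]))
```

The script itself uses np.argsort for the same ordering; the sorted call here is only a dependency-free stand-in.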

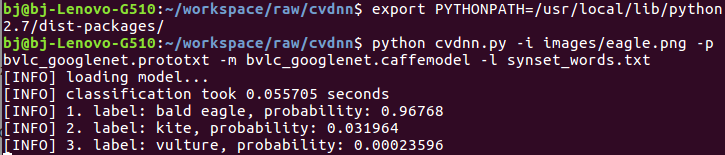

Command line:

Result:

b) For C++ user:

#include <opencv2/opencv.hpp>
#include <opencv2/dnn.hpp>
#include <fstream>
#include <iostream>
#include <sstream>
#include <string>
#include <vector>

// command line help
void help()
{
    std::cout << "Usage: cvdnn <image> <prototxt> <model> <labels>" << std::endl;
}

// load labels from file
bool load_labels(const std::string &path, std::vector<std::string> &labels)
{
    std::ifstream label_stream(path.c_str());
    if (!label_stream)
        return false;

    std::string line;
    // reading in the loop condition avoids pushing a bogus label at end of file
    while (std::getline(label_stream, line))
    {
        // the label is the text between the first space and the next comma
        size_t label_beg_idx = line.find(" ") + 1;
        size_t label_end_idx = line.find(",", label_beg_idx + 1);
        if (label_end_idx == std::string::npos)
            label_end_idx = line.length();
        size_t label_len = label_end_idx - label_beg_idx;
        labels.push_back(line.substr(label_beg_idx, label_len));
    }
    return true;
}

int main(int argc, char **argv)
{
    if (argc != 5)
    {
        help();
        return -1;
    }

    // std string for each argument
    std::string image_path(argv[1]);
    std::string proto_path(argv[2]);
    std::string model_path(argv[3]);
    std::string label_path(argv[4]);

    // load labels from file
    std::vector<std::string> labels;
    if (!load_labels(label_path, labels))
    {
        std::cout << "ERROR: cannot open label file: " << label_path << std::endl;
        return -1;
    }

    // read image
    cv::Mat image = cv::imread(image_path);
    if (image.empty())
    {
        std::cout << "ERROR: cannot open image file: " << image_path << std::endl;
        return -1;
    }

    // create blob from image
    cv::Mat blob = cv::dnn::blobFromImage(image, 1, cv::Size(224, 224), cv::Scalar(104, 117, 123));

    // read net from caffe
    cv::dnn::Net net = cv::dnn::readNetFromCaffe(proto_path, model_path);

    // set net input
    net.setInput(blob);

    // time the forward pass
    double t = cv::getTickCount();
    cv::Mat probs = net.forward();
    t = (cv::getTickCount() - t) / cv::getTickFrequency();

    // show forward time cost (fractional seconds, not truncated to int)
    std::cout << "[INFO] classification took " << t << " seconds" << std::endl;

    // debug
    //std::cout << "[INFO] probs.rows: " << probs.rows << ", probs.cols: " << probs.cols << std::endl;

    // sort class indices by descending probability
    cv::Mat idxs;
    cv::sortIdx(probs, idxs, cv::SORT_EVERY_ROW + cv::SORT_DESCENDING);

    // debug
    //std::cout << "[INFO] idxs.rows: " << idxs.rows << ", idxs.cols: " << idxs.cols << std::endl;

    // show the top 3 scored classes
    for (int i = 0; i < 3; i++)
    {
        int idx = idxs.at<int>(0, i);
        std::string label = labels[idx];
        float prob = probs.at<float>(0, idx);

        std::stringstream ss;
        ss << label << ": " << prob;
        std::string text = ss.str();
        std::cout << "[INFO] " << (i + 1) << ". " << text << std::endl;

        // mark the top one on the image
        if (i == 0)
            cv::putText(image, text, cv::Point(5, 25), cv::FONT_HERSHEY_SIMPLEX, 0.7, cv::Scalar(0, 0, 255), 2);
    }

    cv::imshow("cvdnn_cpp", image);
    cv::waitKey(0);
    cv::destroyAllWindows();

    return 0;
}

// end of file
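Both examples pass a mean of (104, 117, 123) and a scale factor of 1 to blobFromImage. As a rough illustration of what that preprocessing amounts to, the sketch below subtracts a per-channel mean, applies the scale, and reorders an H x W x 3 image into the 1 x 3 x H x W layout the network expects. It is not OpenCV's implementation: resizing and channel swapping are left out, nested lists stand in for arrays, and to_blob is a name made up for this example.

```python
def to_blob(image, mean=(104, 117, 123), scale=1.0):
    """Convert an H x W x 3 nested list into a 1 x 3 x H x W nested list.

    Each value becomes scale * (pixel[c] - mean[c]) for channel c.
    """
    height = len(image)
    width = len(image[0])
    planes = []
    for c in range(3):
        # collect channel c of every pixel into its own H x W plane
        plane = [[scale * (image[y][x][c] - mean[c]) for x in range(width)]
                 for y in range(height)]
        planes.append(plane)
    return [planes]  # leading batch dimension of size 1


if __name__ == "__main__":
    tiny = [[[104, 117, 123], [105, 119, 126]]]  # a 1 x 2 image
    print(to_blob(tiny))
```

The mean values match the per-channel means used by the examples above; for a different model you would substitute the means it was trained with.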

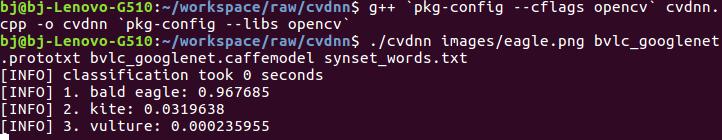

Command line:

Result:

The example code and dataset above are mostly borrowed from the Deep Learning with OpenCV tutorial by Adrian Rosebrock and are kept on GitHub.

帮杰

Passionate about web and smart-device development, with a focus on connecting people and machines.
